The goalposts moved in capital markets with the launch of ChatGPT. I'm not the first to say that, and some will say I'm a hype monkey. However, I believe it, and events like this don't happen every day. As on the sidelines of a sports pitch, capital markets leaders will reassess their set plays and identify new tactics to determine winners and losers amidst a tornado of change. I’ve lived through three such changes in my working career: the creation of new financial instruments, then the introduction of HFT, and the post-GFC wave of regulation. What’s interesting is that in all three cases, AI was on the sidelines, the substitute desperate to come on and change the game. When it did, the captains and experienced players on the pitch kept it in check, however exciting the talent.

Generative AI is a fourth massive career tornado, and this time AI drives the game. Following the sports analogy, it brings together an elevated supersub, the hitherto checked talent of discriminative AI, and the new kid with seemingly unlimited potential, generative AI. Together, they deliver creativity, content and, only when they play together, hard answers that drive value. No longer do the aging Lionel Messis and Tom Bradys of capital markets (I won’t name any) drive the new plays. The supersubs and whizz-kids of all genders and ethnicities are in town, reinvigorating a relatively static industry.

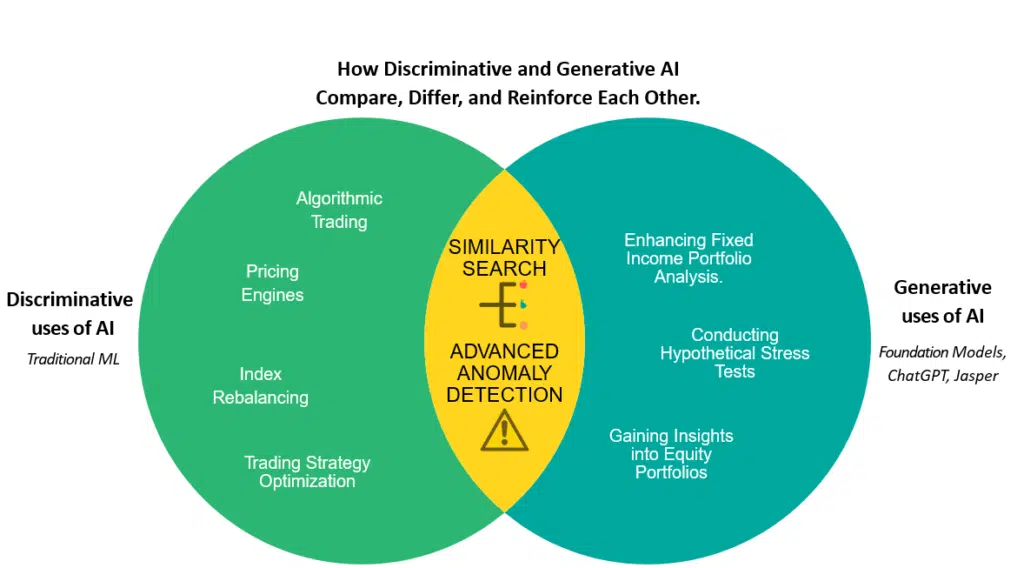

Let’s explore how Discriminative and Generative AI compare, differ, and reinforce each other.

In simple terms, both build on machine learning and deep learning models that seek to understand and learn from data, and deploy that understanding in other situations. In the case of the exciting, young generative AI, new data gets created as text, code, music, and other types of content, while the worldly-wise, evolved-over-many-years discriminative AI finds real answers and solutions in new data sets.

When both types of AI intersect, that's when the magic happens. On the GenAI side sit Large Language Models (LLMs); on the Discriminative AI side, large data models (I will call them LDMs for the remainder of this article for simplicity, but they're not to be confused with logical data models, which is a common use of the LDM acronym and means something different). When LLMs and LDMs are deployed together, they deliver valuable, specific solutions alongside content-rich scenarios, context and meaning.

Below we explore three real use cases identified in capital markets. We show how they bring both types of AI together to help financial professionals make informed decisions, with context. These are not dreamed-up art-of-the-possible cases, but real scenarios which brokers and buy-side leaders are working on as I write.

Example 1: Enhance Fixed Income Portfolio Analysis

Consider a scenario where a bank evaluates a fixed income portfolio consisting of 100 OTC bonds, each with non-standard terms embedded within PDF documents. The bank can use LLMs, through encodings known as vector embeddings, to classify and categorize these bonds fairly accurately. By working with the data directly (the LDMs) and combining similarity search with prescriptive techniques such as optimization, the bank can identify pricing information for instruments closely matching the portfolio constituents. A pertinent prompt from a portfolio manager might be, “Can you give me pricing details and a summary of risks (liquidity, counterparty/credit risk, market risk) for the portfolio, along with confidence intervals?”
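To make the similarity-search step a little more concrete, here is a minimal sketch in plain Python. Everything in it is an illustrative assumption rather than a real system: the four-dimensional embeddings, the reference bond names (`REF_A` and so on), the prices, and the helper functions `nearest_priced_bonds` and `indicative_price` are all hypothetical. In practice an embedding model would produce much longer vectors from the bond documents, and a vector database would do the search.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings and prices for bonds that already have a market price.
reference_bonds = {
    "REF_A": {"embedding": [0.9, 0.1, 0.0, 0.2], "price": 98.5},
    "REF_B": {"embedding": [0.8, 0.2, 0.1, 0.1], "price": 97.0},
    "REF_C": {"embedding": [0.0, 0.9, 0.4, 0.0], "price": 104.2},
}

def nearest_priced_bonds(query_embedding, references, k=2):
    """Return the k (similarity, name) pairs most similar to the query."""
    scored = [
        (cosine_similarity(query_embedding, ref["embedding"]), name)
        for name, ref in references.items()
    ]
    scored.sort(reverse=True)
    return scored[:k]

def indicative_price(query_embedding, references, k=2):
    """Similarity-weighted average price of the k nearest reference bonds."""
    neighbours = nearest_priced_bonds(query_embedding, references, k)
    total_similarity = sum(sim for sim, _ in neighbours)
    weighted = sum(sim * references[name]["price"] for sim, name in neighbours)
    return weighted / total_similarity

# Hypothetical embedding of one unpriced OTC bond from the portfolio.
otc_bond = [0.85, 0.15, 0.05, 0.15]
neighbours = nearest_priced_bonds(otc_bond, reference_bonds)
price = indicative_price(otc_bond, reference_bonds)
print(neighbours, round(price, 2))
```

The similarity-weighted average is just one simple way to turn neighbours into an indicative price. The optimization and confidence intervals mentioned above would sit on top of a step like this.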

Example 2: Gain Insights into Equity Portfolios

For investors managing equity portfolios, let’s say you have a portfolio of 30 equities, and you want to analyze the exposure. Deploy a prompt like “Based on my portfolio, how much revenue is generated from the ASEAN region today?” or “Could you provide me with a pie chart of employees broken down by country from my portfolio?” Additionally, you could delve into ESG factors to estimate the ranking of companies in your portfolio from, say, a gender equality perspective. In this case, a central vector database could direct a prompt through the LLM or the LDM as appropriate, assuming it can handle multiple vector data types: configured and indexed vector embeddings, plus vector data that directly incorporates the time series of the portfolio constituents and any accompanying factors. For example, the LLM might suggest new ESG factors, but the LDM can work directly on your organization's factor universe.

Example 3: Conduct Hypothetical Stress Tests

By leveraging historical market events and real-time data, LLMs and LDMs can assist in understanding potential portfolio outcomes, for example by conducting hypothetical stress tests. Suppose you have a portfolio of 30 equities and want to assess the impact of specific events. Prompt with “What would happen to my portfolio if AWS missed their earnings next week?” or “How would my portfolio be affected if the ECB raised interest rates unexpectedly next week?” Moreover, you could identify the specific stocks in your portfolio universe with the highest price sensitivity to events such as a data breach, at the push of a button or with a verbal prompt. That's empowering. Again, such contextual analysis draws on LLM inference to create scenarios, which you can then infuse with your own market and portfolio data.
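As a rough illustration of the data side of such a stress test, the sketch below applies a single hypothetical shock to a toy three-stock portfolio using a first-order (linear) sensitivity per stock. The tickers, weights, sensitivities and the size of the shock are all made up for the example. A real test would estimate sensitivities from your own historical market data and would rarely be this linear.

```python
# Hypothetical portfolio weights (fractions of portfolio value, summing to 1).
weights = {"AAA": 0.5, "BBB": 0.3, "CCC": 0.2}

# Hypothetical sensitivities: percentage price change per one percentage
# point of unexpected rate rise. These would normally be estimated from data.
rate_sensitivity = {"AAA": -4.0, "BBB": -1.5, "CCC": 0.5}

def stressed_return(weights, sensitivities, shock):
    """First-order portfolio impact (in percent) of a shock of the given size."""
    return sum(w * sensitivities[name] * shock for name, w in weights.items())

def most_sensitive(sensitivities):
    """Name of the stock with the largest absolute sensitivity."""
    return max(sensitivities, key=lambda name: abs(sensitivities[name]))

# Scenario: an unexpected rate rise of 0.25 percentage points.
shock = 0.25
impact = stressed_return(weights, rate_sensitivity, shock)
worst = most_sensitive(rate_sensitivity)
print(f"Estimated portfolio impact: {impact:.4f}% (most sensitive: {worst})")
```

In the split described above, the LLM would help phrase and generate the scenario, while a calculation along these lines runs directly on your portfolio data.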

The Importance of a Vector Database that Manages More than Just, Ahem, Vector (Embeddings)

In the use cases above, I've introduced the notion of a vector database without actually explaining it. The word vector is an overloaded term in maths and computer science. On one (mostly mathematical) hand, it's a simple means of representing magnitude and direction, for example in geometry and mechanics. On the other (mostly computer science) hand, a vector represents a sequence of numbers, for example daily high, low or close prices, and can be of arbitrary length. For those who use mathematical programming languages like R, MATLAB, NumPy (Python), Julia or q, the latter is familiar, though you've likely also studied math, so you understand the former too.

The new type of vector database that powers the Large Language Model (and can power a large data model) blends the two. It takes the notion of a "vector embedding", which encodes your thing, whether a word or a concept, into a mapped or derived sequence of numbers (via a neural network training process), so it captures more than just magnitude and direction. Your vector database can then search the embeddings and retrieve the words, code or other things, together with other things that are similar, to assemble a piece of integrated content. The vector embedding notion is not new, though. I was demoing the capabilities of the word2vec (short for "word to vector") library back in about 2015 to build semantic-based trading strategies, leveraging tweet events for example. How quickly we forget these things.

However, for R, MATLAB, NumPy, Julia and q users, that's limiting. Vectors handle time series and sequenced data, and truly help model distance and magnitude. They also allow for fast mathematical operations, so-called vector processing, which helps you manipulate data far more quickly and efficiently than you can with explicit loops (as in C++) or row-based queries (as with SQL), which wastefully dominate so much of modern computing. This is also how quants tend to understand their portfolio data, trade data, market data, risk data, event data and so much more. In this way, vector processing tends to sit in the domain of linear and matrix algebra, the conceptual foundation not just for quant finance but also for engineers designing control systems, image and video processing (images are matrices of data, and videos are sequences of images over time), signal processing (how signals, e.g. sounds, change over time) and dynamic systems more generally. Matrix algebra also underlies the computations inside neural networks, the very (mathematical) thing that drives the LLM.
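For readers less familiar with vector processing, here is a small sketch of the difference, assuming NumPy is installed. The closing prices are made up, and the function names `returns_loop` and `returns_vectorised` are my own for illustration. Both functions compute simple daily returns. The first walks the series element by element, the second expresses the same calculation as one operation on whole vectors.

```python
import numpy as np

# Hypothetical series of daily closing prices.
closes = np.array([100.0, 101.0, 99.0, 102.0, 103.0])

def returns_loop(prices):
    """Simple daily returns computed one element at a time."""
    out = []
    for i in range(1, len(prices)):
        out.append(prices[i] / prices[i - 1] - 1.0)
    return out

def returns_vectorised(prices):
    """The same returns as a single whole-vector operation."""
    return prices[1:] / prices[:-1] - 1.0

loop_result = returns_loop(closes)
vector_result = returns_vectorised(closes)
print(vector_result)
```

On five prices the difference is invisible, but on millions of ticks the vectorised form is typically much faster and, to a quant's eye, reads closer to the maths.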

So a proper vector database, to my mind, handles both vector embeddings and vector processing, and in this way should be able to serve the LLM as well as real data beyond the LLM's vector embeddings: the large data model.

Most new vector databases focus on the former, which to my mind is useless beyond being a sort of historic memory for an LLM. Proper vector databases, if they're true to their vector roots, do both, and thus support large language and large data models.

The future is bright for vectors, which by the way go right back to Turing and his ambitions for the Automatic Computing Engine (ACE), as well as to the key foundations of mathematical computing and control engineering, so in many ways we're going back to our roots.